Where does ‘failure’ come from? Why do some things not go as we planned? When bad things happen, the moment can feel chaotic and appear very complex. Often, in hindsight (when the urgency has faded), we find that good people made bad decisions. Most of the time such failures were not complicated, and we find that most likely anyone else put in the same position would have made the same decision. So why do things not always go as planned?

We are going to take a very macro view of this together. I will let you fill in the blanks about situations you have encountered, where this model just seems to fit. If it doesn’t, please ‘Comment’ about your experience(s) and deviations that you observe.

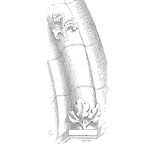

The preview above shows the general pathway from when a ‘failure’ is beginning to form and the steps it will progress through until we have to face the music and deal with its consequences. Let’s break that path down in very simplistic terms.

Most failures originate in the form of flawed systems. They can be inadequate, insufficient and oftentimes just non-existent (there were no rules to follow so we relied on our knowledge to ‘wing it’). I have listed just some examples of these cultural norms and systems, but in effect, these contribute to our reasoning processes for our decisions.

Such organizational systems are put into place to help those who use them make better decisions. When flaws exist in such systems, they feed less than adequate information to the decision-maker. We refer to these ‘systems’ failures as Latent Root Causes. This is appropriate because they are latent or dormant; they are always there, but by themselves they cannot hurt anyone because they are essentially just paper (or electronic files :-).

So less than adequate information is fed to a well-intentioned decision-maker, and at this point they have to make a decision. This decision will normally come in the form of taking an action (potential error of commission) or choosing NOT to take an action (potential error of omission). The decision error itself becomes the ‘active’ error, or what activates the latent errors. Remember, at this point, all of the reasoning going on is between the ears of the decision-maker. As outsiders, we can’t see anything.

This is an important point because the decision itself will trigger a visible consequence. It may be a turn of a switch, a check on a checklist, the changing of a setting, the implementation of a procedure or a host of other possibilities…but as a result of the decision, the consequences are now visible.

At this point we can now view the cause-and-effect chain of consequences which we will call the Physical Root Causes. If people in our workplaces do not recognize/identify this chain of consequences forming and take actions to stop the chain, then eventually a bad outcome will occur that will have to be addressed.

We often hear the term ‘situational awareness’ to describe this sensory awareness of our surroundings. In low morale environments, such awareness is often dulled as employees become human robots and do nothing more, or less, than what is expected. They often operate as if they have blinders on and see only their work spaces. By contrast, High Reliability Organizations (HRO) go out of their way to train their staff on how to recognize these error chains and to take actions to stop them from progressing. This is also emphasized from the managerial oversight perspective to prevent normalization of deviance (when our norms or practices tend to slowly deviate from our standards).

If we are astute enough to identify the chain and break it, we will likely not suffer the full consequences that could have happened. We call these ‘near misses’…I call them ‘we got lucky!’ Regardless, stopping the full consequences can likely save lives and prevent catastrophic damage.

If we are not able to stop the chain, then it will progress through what I call ‘the threshold of pain’. This means a regulatory trigger will be met and a full-blown investigation will be required. At this point the suits show up and we will have no choice but to analyze the failure, driven purely by reactionary forces.

So to recap, flawed systems influence decision reasoning. As a result, decision errors are made that trigger visible consequences. If the chain of consequences is not stopped, then bad outcomes will likely occur and have to be dealt with.

When you reflect on this macro view, it is not industry specific. No matter where humans work, this process is likely at play. Can you cite some examples from your experience?

Bob Latino /CEO /Reliability Center, Inc./blatino@reliability.com /www.Reliability.com
