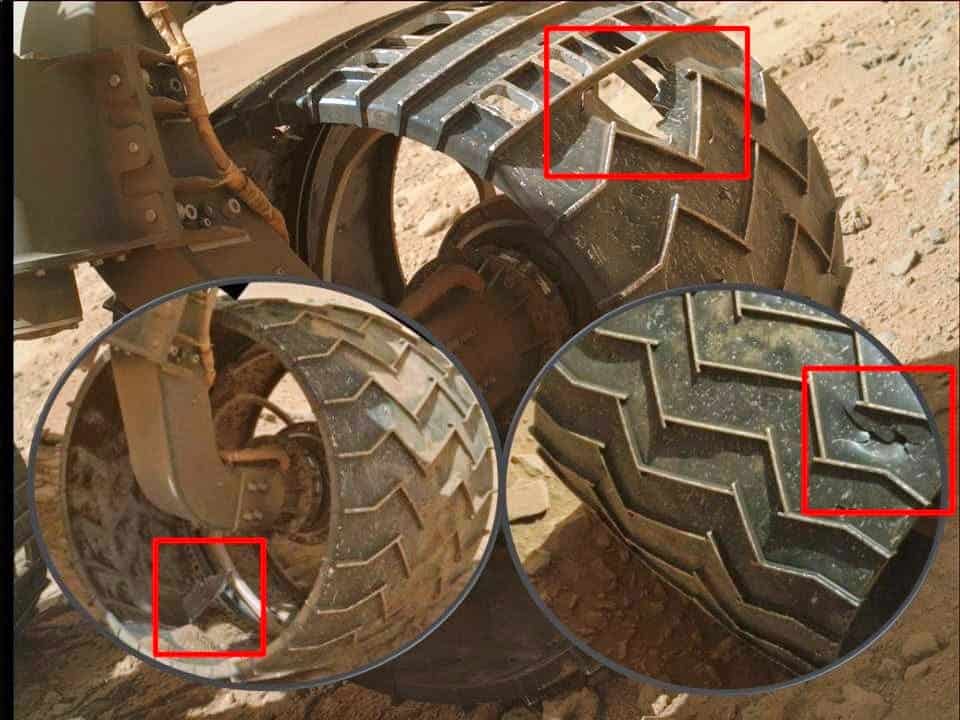

This is the wheel of the Curiosity Rover after thousands of rotations on Mars.

This is how I feel after I ask customers about legacy product performance.

I am always surprised how much I feel this way every time I hear “I don’t know root cause for our common field failures,” “we band-aided this,” “this other failure mode went away on its own,” or “we screen and keep more of the bad ones in house.”

But the bummed feeling comes from empathy.

It indicates two things: first, outsiders can sometimes see obvious paths that those close to the problem cannot; and second, being emotional about data collection might be a sign I should chill out and go fishing or something.

It’s interesting how higher end fishing reels have larger gaps in moving components but maintain a tight feel from handle to line.

It seems like the manufacturers found a way to let the mechanism process sand and debris so that fewer cleaning/rebuild cycles are needed. This was also the reason why the AK-47 was such a milestone military rifle. The Soviet engineers opened up all the “unnecessary” tight tolerances so that debris wouldn’t jam components. ...Ugh. It’s hopeless.

Seeing a team or individual trying desperately, and failing, to get the time or resources to do enough testing just stinks. It’s a real struggle to convince management to invest in this, and for good reason.

It is a major drag on the program.

The tragedy is that in many cases a high percentage of the new product is carrying legacy technology that has been in the field for years. There are piles and piles of recorded failures, cycle runs and issued root causes just floating out there.

Most of it is around somewhere: on a piece of paper, in a notebook, in a filing cabinet, or in a database. Often someone in Quality collected it all and either doesn’t know who to share it with or isn’t given time to compile it.

This gathering and compiling likely takes a fraction of the effort and time needed to collect similar data from new testing.

I recommend estimating the time and resources it will take to do this internal “scavenge and scrub” and proposing it as a resource requirement alongside the test program.

Without a side-by-side comparison with the new test program, the “scavenge and scrub” could appear to be an equal burden on the program. It’s the comparative ROI that makes the case:

“We have 70% legacy components in the new design.”

“The primary wear out failure modes and drivers of our top three reliability failures are linked to shared components.”

“We need about 80 man hours to collect and process the legacy data.”

“There are two high-risk new features that we can do isolated testing on and have accelerated life data in about three weeks.”
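If it helps to put numbers next to each other, the side-by-side can be roughed out in a few lines. This is only a sketch: the 80 hours and 70% come from the example statements above, but the 600-hour figure for testing everything from scratch is a made-up placeholder, and treating "70% legacy components" as "70% of the design covered by legacy data" is my simplifying assumption. Swap in your own estimates.

```python
from dataclasses import dataclass


@dataclass
class DataOption:
    """One way of getting reliability data for the new design."""
    name: str
    effort_hours: float   # labor needed to obtain the data
    coverage_pct: float   # percent of the new design the data speaks to

    def hours_per_coverage_point(self) -> float:
        # Labor hours spent per percentage point of the design covered.
        return self.effort_hours / self.coverage_pct


# From the example statements: ~80 hours of scavenging, 70% legacy content.
# ASSUMPTION: legacy data covers all of the legacy content.
scavenge = DataOption("scavenge and scrub", effort_hours=80, coverage_pct=70)

# HYPOTHETICAL placeholder: 600 hours to test the whole design as if it were new.
test_everything = DataOption("test everything new", effort_hours=600, coverage_pct=100)

for option in (scavenge, test_everything):
    rate = option.hours_per_coverage_point()
    print(f"{option.name}: {rate:.1f} hours per % of design covered")
```

The absolute numbers matter less than the ratio between the two rows; that ratio is what you put in front of management.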

So again, look at this rover wheel picture—without accompanying data. What can you surmise?

Well, it clearly shows where potential failure from wear may be. You could also surmise that there are areas where you could reduce robustness to save weight. A shape change to increase life? ...

So... this is data collection from a different planet. What’s your excuse?
