Test To Bogy Sample Sizes

Introduction

Reliability verification is a fundamental stage in the product development process. It is common for engineers to run a test to bogy (TTB). What sample size is required for a TTB?

Reliability Testing

Reliability is the probability that a part functions successfully under a specified life, duty cycle, and environmental conditions. Many functions are specified during the design process. Each reliability test is focused on validating a specific function. The targeted verification level depends on the criticality of the function and potential failure modes. The life could be specified as a count of cycles, an operating time, or perhaps a mileage or mileage equivalent. The duty cycle is a description of how the device is used. Environmental stresses are generally included in the test.

The product has an unknown inherent reliability, R. The reliability test verifies that R exceeds a critical value with a specified probability, also called a confidence, C. This may be stated mathematically as

$$Pr(R>R_{Critical})=C$$

(1)

Test to Bogy

In a test to bogy, N parts are tested to one life and no failures are allowed! So how many samples are required?

Case I: N=1

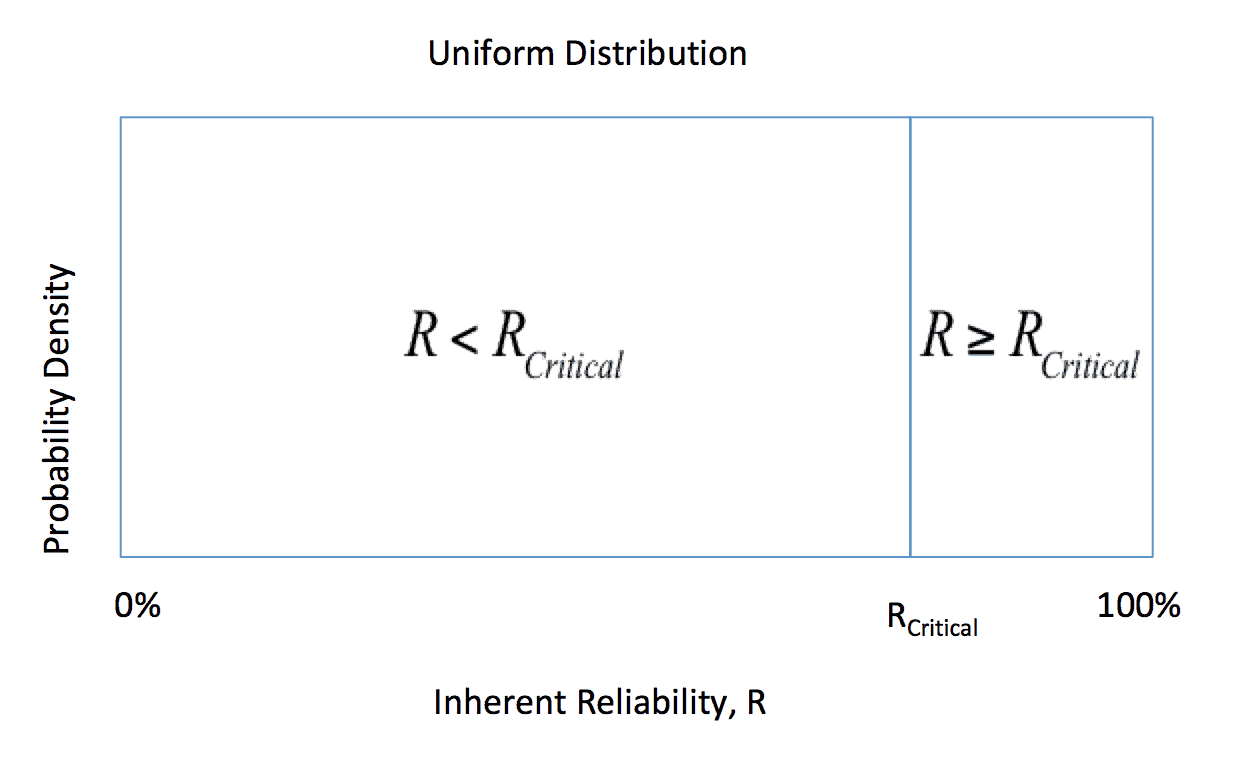

If a uniform distribution of R is assumed, bias is avoided in the estimate of R from the test (figure 1).

Figure 1

Figure 1 captures all of the possibilities for R. Note that with a high value of $R_{Critical}$, the uniform distribution includes many low values of R. We would like to detect whether $R<R_{Critical}$ or $R \geq R_{Critical}$.

The probability of success of one part is R and the probability of failure is 1-R. If one part passes the test, the unknown intrinsic reliability is still R. We would like to improve our chances of detecting $R<R_{Critical}$. The only way to accomplish that is to increase the sample size.

Case II: N>1

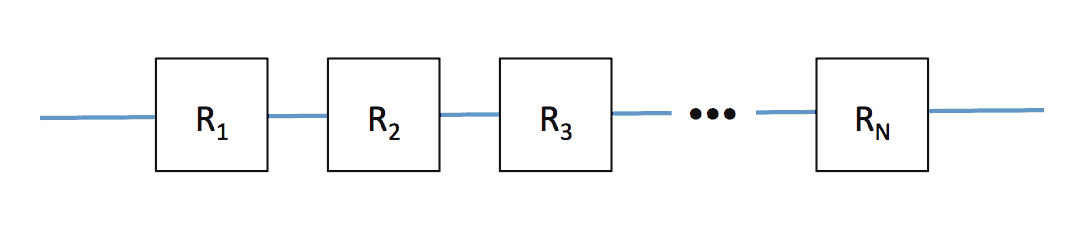

If the inherent reliability is R and N parts are tested, the N parts behave as a series system: the test passes only if every part survives. A block diagram of the reliability is shown in figure 2.

Figure 2

If system success is defined as producing at least X successes out of N trials, then the binomial distribution applies,

$$Pr(x \geq X)=\sum_{x=X}^{N}{N \choose x}R^x(1-R)^{N-x}$$

(2)

When X=N and no failures are allowed, then the probability of passing the test reduces to

$$Pr(x=N)=R^N$$

(3)
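The reduction from equation (2) to equation (3) can be checked numerically. The following is a minimal sketch, not part of the original article; the function names `prob_at_least` and `prob_pass_zero_failure` are hypothetical and chosen only for illustration.

```python
from math import comb


def prob_at_least(successes_required: int, n: int, r: float) -> float:
    """Binomial tail of equation (2): probability of at least
    `successes_required` successes in n trials with part reliability r."""
    return sum(
        comb(n, x) * r**x * (1.0 - r) ** (n - x)
        for x in range(successes_required, n + 1)
    )


def prob_pass_zero_failure(n: int, r: float) -> float:
    """Equation (3): probability that all n parts survive the test."""
    return r**n


# With X = N, only the x = N term of the sum remains, so both agree.
n, r = 22, 0.90
print(prob_at_least(n, n, r), prob_pass_zero_failure(n, r))
```

With X set equal to N, the sum contains a single term, which is why the two functions return the same value.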

Graphically, this can be displayed as a mapping from part reliability into system reliability, figure 2.

Figure 2

The graph maps the component $R_{Critical}$ into a critical region for the system reliability. From the graph, it can be seen that if $R \geq R_{Critical}$, then the probability of failing the system level test is low. Again, the probability of failing the test, $1-R^N$, evaluated at $R=R_{Critical}$, is the confidence,

$$1-R^N=C$$

(4)

This can be rearranged to yield,

$$R^N=1-C$$

(5)

This equation is modified to calculate the samples required,

$$N=\frac{\ln(1-C)}{\ln(R)}$$

(6)

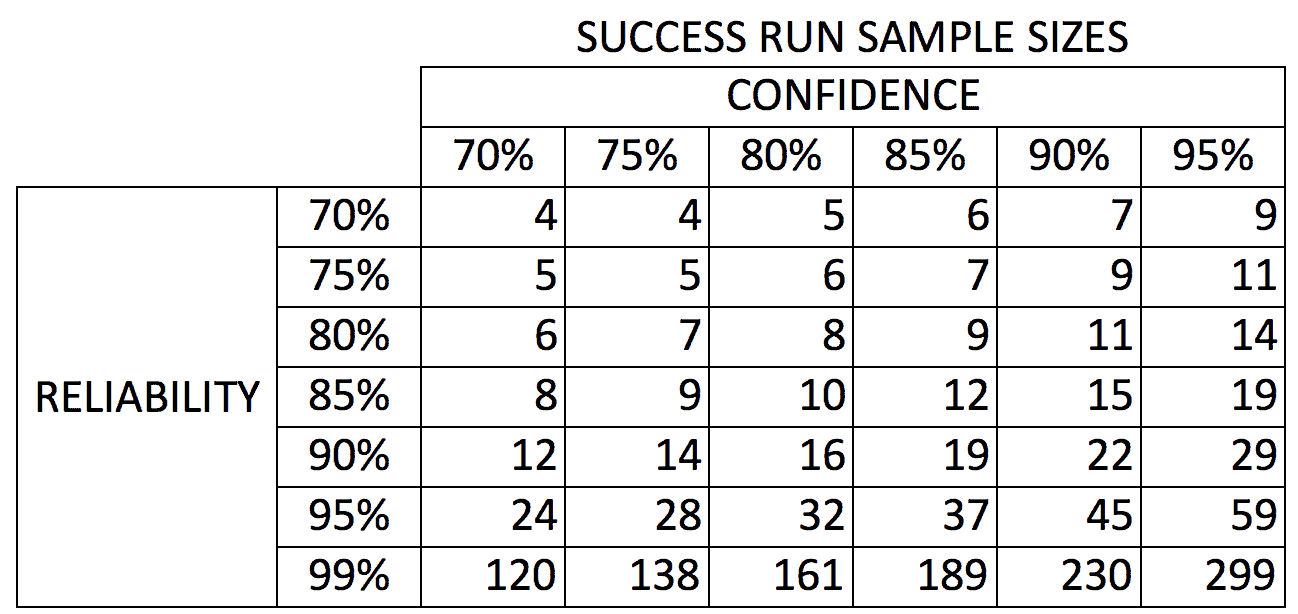

The result is rounded up to the next higher integer. Some typical values are shown in table 1.

Table 1
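Equation (6), with the result rounded up, can be evaluated directly. The sketch below is an illustration under stated assumptions, not the author's tool: the function name `ttb_sample_size` is hypothetical, and reliability and confidence are assumed to be given as fractions strictly between 0 and 1.

```python
import math


def ttb_sample_size(reliability: float, confidence: float) -> int:
    """Zero-failure test-to-bogy sample size from equation (6),
    rounded up to the next integer."""
    if not (0.0 < reliability < 1.0 and 0.0 < confidence < 1.0):
        raise ValueError("reliability and confidence must be between 0 and 1")
    return math.ceil(math.log(1.0 - confidence) / math.log(reliability))


# A few reliability/confidence targets mentioned in the article.
for r, c in [(0.75, 0.80), (0.90, 0.90), (0.95, 0.90), (0.99, 0.90)]:
    print(f"R{round(r * 100)}/C{round(c * 100)}: N = {ttb_sample_size(r, c)}")
```

For R90/C90 this gives 22 parts and for R75/C80 it gives 6 parts, matching the examples in the text; R99/C90 gives 230 parts.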

The sample size requirements grow in a non-linear pattern with increasing reliability and confidence verification targets. To avoid excessive testing, the targets vary according to the criticality of the product. If the part is safety critical, then high levels of reliability, like 95% or 99% are targeted. Similarly, a high confidence may be specified, which increases the sample size.

A common practice is to target a minimum of 90% reliability at 90% confidence for non-critical functions. This is sometimes abbreviated to R90/C90 or R90C90. Critical functions have higher reliability verification targets, perhaps, R95/C90 or R99/C90. Examples,

- In the electronics industry, it is easy to populate a test board with many identical parts and simultaneously conduct a reliability test. The cost per item is relatively low. An AEC test of automotive grade solid-state electronic components includes reliability tests with 77 parts from 3 different production lots, or 231 parts. This is greater than the verification to R99/C90 levels. Since electronic components are commodities used in a wide variety of products, a component failure could jeopardize an electronic module that involves safety or mobility. The highest criticality of function drives the selection of reliability verification levels.

- A car radio is not a critical component of an automobile, but any failure will be quickly noticed by the customer. Generally, car radio verification may involve testing 22 parts to 1 bogy without failure. This is an R90C90 verification level. Note that it is relatively more expensive to create a prototype module and conduct a verification test, so the targets and sample sizes are lower.

- Some items may be tested to relatively low targets, perhaps R75/C80, or 6 parts. These items could be minor convenience items or could be high-level assemblies that are very expensive to build. For example, a prototype vehicle could cost hundreds of thousands of dollars to build. The numbers and types of vehicles employed in verification testing are highly restricted due to costs. Even crash testing to verify safety will employ only 2 or 3 vehicles.
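The examples above can be cross-checked by running equation (4) in the other direction: given N parts passing with zero failures, what confidence is demonstrated at a stated reliability? This is a minimal sketch; the function name `demonstrated_confidence` is hypothetical.

```python
def demonstrated_confidence(n: int, reliability: float) -> float:
    """Equation (4): confidence demonstrated when n parts complete
    one life with zero failures, at the stated reliability."""
    if n < 1 or not (0.0 < reliability < 1.0):
        raise ValueError("n must be >= 1 and reliability between 0 and 1")
    return 1.0 - reliability**n


# Sample sizes and reliability targets taken from the examples in the text.
for n, r in [(231, 0.99), (22, 0.90), (6, 0.75)]:
    print(f"N = {n}, R = {r:.2f}: C = {demonstrated_confidence(n, r):.3f}")
```

Each case lands just above its stated confidence target, and removing one part (for example 21 parts at R = 0.90) drops the demonstrated confidence below the target.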

Concerns

Reliability verification test to bogy is easy to define, but failures are not allowed. Failures provide an opportunity to determine failure modes and identify product weaknesses.

The life targets can be very high. If the average retail car customer operates a vehicle for 2 hours/day over 10 years of operation, then a life test could require 7,300 hours of testing. Some method is needed to accelerate the test.

Duty cycles need attention. For example,

- A missile experiences a very long time in storage, transportation, and operational standby mode followed by a very short mission time. The effect of storage, transportation, and standby needs to be understood.

- Automobile usage includes a long time with the engine off and the vehicle stationary. However, vehicles have systems like remote keyless entry systems that are continuously operating. The customer expects that a click on a key fob will lock or unlock the doors, start or stop the engine, chirp the horn, etc. Then the duty cycle for 10 years should be 87,600 hours of operation. Obviously, this component needs an accelerated test.
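The life targets quoted in this section follow from simple arithmetic. The sketch below reproduces them, assuming 365 days per year with leap days ignored (an assumption that matches the article's figures); the function name `life_test_hours` is hypothetical.

```python
def life_test_hours(years: int, hours_per_day: float, days_per_year: int = 365) -> float:
    """Unaccelerated test hours for one life.
    Assumes a fixed 365-day year; leap days are ignored."""
    return years * days_per_year * hours_per_day


driving_hours = life_test_hours(10, 2)      # vehicle driven 2 hours/day
always_on_hours = life_test_hours(10, 24)   # continuously operating system
print(driving_hours, always_on_hours)
```

The continuously operating case requires twelve times the hours of the driving case, which is why it makes the need for acceleration most obvious.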

The large sample sizes required to support reliability verification are very costly and consume a lot of resources.

Conclusions

- Reliability verification test plans can employ a test to bogy with zero failures allowed. The sample sizes required are given by equation (6).

- High reliability and confidence targets drive large sample sizes, so other methods should be considered.

- Accelerated tests that reduce test time and sample sizes, and that allow failures, are desired.

Note

If you want to engage me on this or other topics, please contact me. I offer a free hour for the first contact to discuss your problem/concerns and to determine how I can help you.

I have worked in Quality, Reliability, Applied Statistics, and Data Analytics over 30 years in design engineering and manufacturing. In the university, I taught at the graduate level. I also provide Minitab seminars to corporate clients, write articles, and have presented and written papers at SAE, ISSAT, and ASQ. I want to help solve your design and manufacturing problems.

Dennis Craggs, Consultant

810-964-1529

dlcraggs@me.com


There is a continuation of this item – http://nomtbf.com/2016/05/lifetime-evaluation-vs-measurement-part-3/#more-1897

Great article

Thanks Michael. There should probably be followup articles on other types of data. One that immediately comes to mind is survey data. Generally, there are two or more responses to a question. Each response can be modeled with a binomial. With enough survey responses, each answer to a question is approximately normally distributed. So how many surveys need to be returned to achieve a desired confidence?