Guest Post by Geary Sikich (first posted on CERM ® RISK INSIGHTS – reposted here with permission)

Many aspects of risk management are deeply rooted in mathematical formulae for determining probability. This heavy dependence on mathematics to determine the probability of risk realization may create “false positives” regarding a risk that can be either positive or negative. There is also a limit on how much data can be gathered and assessed with respect to developing the probability equation for the risk being assessed.

A simple definition of uncertainty, “the quality or state of being uncertain; something that is doubtful or unknown,” requires us to know what uncertain means. A simple definition of uncertain, “not exactly known or decided; not definite or fixed; not definitely known,” should lead us to recognize that calculating the probability of uncertainty is, if not impossible, surely extremely difficult and time-consuming.

When we identify a risk, we immediately attempt to quantify it in terms of probability of occurrence (realization). Probability is the chance that something will happen; a logical relation between statements such that evidence confirming one confirms the other to some degree. Probability is often affected by the biases of the observer, leading, again, to potential “false positives” regarding the risk being assessed. Hence, one has to consider Heisenberg’s “Uncertainty Principle,” which is generally stated as: “any of a variety of mathematical inequalities asserting a fundamental limit to the precision with which certain pairs of physical properties of a particle, known as complementary variables, such as position x and momentum p, can be known”. First introduced in 1927 by the German physicist Werner Heisenberg, it states that the more precisely the position of some particle is determined, the less precisely its momentum can be known, and vice versa.
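For reference, the position and momentum case is commonly written as the following inequality (the Kennard form, also from 1927), where $\sigma_x$ and $\sigma_p$ are the standard deviations of position and momentum and $\hbar$ is the reduced Planck constant:

$$\sigma_x \, \sigma_p \ge \frac{\hbar}{2}$$

The product of the two spreads has a floor, so shrinking one forces the other to grow; that is the trade-off described in the quotation above.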

Uncertainty: Exploring Risk

Risk is all about uncertainty. There is uncertainty associated with identification, recognition, mitigation, establishing and maintaining risk parity, etc. Risk has both a negative and a positive side; or, to be clearer, there are negatives and positives that represent multi-dimensional aspects of risk. Where does potential, unrecognized value reside? Where are the negative pitfalls that lurk in the “false positives” created by risk compliance? Viewing risk through a multi-dimensional lens can facilitate the identification and management of risk. Think of risk in terms of a kaleidoscope: a simple twist can change the entire picture, the perspectives and the analyses.

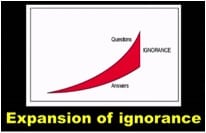

“If you want an answer today you ask a machine,” says Kevin Kelly, author of “The Inevitable”. The following figure, entitled “Expansion of Ignorance,” depicts the quandary we face with uncertainty, which Kevin Kelly refers to as “ignorance”. In effect, it is not ignorance per se; rather, it is the uncertainty created by having an answer and realizing that you have created more questions as a result.

Kelly goes on with the following statement: “Ignorance is opportunity, ignorance is often profit; ignorance is where we’re going to live in this world of much more uncertainty.”

Flying Behind the Plane: Risk Management Today

Fundamental uncertainties derive from our fragmentary understanding of risk and complex system dynamics, and from abundant stochastic variation in risk parameters. Uncertainty is not one-dimensional; it also surrounds the potential impacts of forces such as globalization and decentralization, the effects of movements in global markets and trade regimes, and the effectiveness and utility of risk identification and control measures such as buffering, use of incentives, or strict regulatory approaches.

Such uncertainty underpins the arguments both of those exploiting risk, who demand evidence that exploitation causes harm before accepting limitations, and of those avoiding risk, who seek to limit risk realization in the absence of clear indications of sustainability. Hence, probability, while providing some useful indicators, is not a good predictor of risk realization. There is simply too much complexity, and there are too many unknowns, to accurately predict the probability of a risk occurring at any given time. Given the complexity of business and government today, and their heavy dependence on information systems and automation, determining probabilities becomes less useful due to the acceleration effects created by uncertainty. For example, ask the question “Who owns cyber risk?” and you will get any number of answers, depending on the perspective of the individuals being asked.

At a recent disaster management conference in which I participated, one of the speakers offered the following equation:

$$\text{Threat} \times \text{Vulnerability} \times \text{Impact} = \text{Risk}$$

I would argue that this equation provides the illusion of risk, not the reality of risk. For example, you conduct a risk assessment and determine that there is a threat (e.g., the possibility of a terrorist attack, rated on a scale of 1–10, with 1 being not likely and 10 being extremely likely). Now you have to determine how vulnerable you are to this threat (e.g., on a scale of 1–10, with 1 being not vulnerable and 10 being extremely vulnerable). Next, you determine the impact, again using a scale of 1–10, with 1 being no effect and 10 being extreme effect. You calculate according to the above equation and come up with a number. Now you seek to determine the probability. You establish the probability using, say, a scale of 1–10, with 1 being not probable and 10 being extremely probable. The result is a risk ranking. However, this process does not take into account observer bias or uncertainty. Uncertainty would actually carry more weight than observer bias, simply because of all the unknowns that uncertainty presents. So, one may wish to re-write the equation as follows:

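$$\frac{\text{Risk (current state)}}{\text{Uncertainty}}, \quad \text{where } \text{Risk (current state)} = \text{Threat} \times \text{Vulnerability} \times \text{Impact}$$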

Since the risk that we have identified is not static, uncertainty becomes more of a factor over time than probability, threat, vulnerability and impact. Over time the risk will change, especially because of uncertainty, its non-static nature, potential unintended consequences, etc. Therefore, the scale for uncertainty could be a positive or a negative number that extends to infinity. Risk assessment based on probability of occurrence is, in itself, a risky decision.
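The 1–10 scoring procedure described earlier can be sketched as follows. This is a minimal illustration under stated assumptions, not the conference speaker's or the author's method: the `RiskRating` class, the `rank` helper, the three example risks and all ratings are hypothetical, and combining the score with the probability rating by multiplication is an assumption, since the text does not say how the two are combined into a ranking.

```python
from dataclasses import dataclass


@dataclass
class RiskRating:
    """Hypothetical 1-10 ratings for one risk (illustrative values only)."""
    name: str
    threat: int         # 1 = not likely, 10 = extremely likely
    vulnerability: int  # 1 = not vulnerable, 10 = extremely vulnerable
    impact: int         # 1 = no effect, 10 = extreme effect
    probability: int    # 1 = not probable, 10 = extremely probable

    def __post_init__(self) -> None:
        # Reject ratings outside the 1-10 scale used in the text.
        for field_name in ("threat", "vulnerability", "impact", "probability"):
            value = getattr(self, field_name)
            if not 1 <= value <= 10:
                raise ValueError(f"{field_name} must be between 1 and 10, got {value}")

    @property
    def score(self) -> int:
        """Threat x Vulnerability x Impact, as in the conference equation."""
        return self.threat * self.vulnerability * self.impact

    @property
    def ranking_value(self) -> int:
        """Assumed combination: the score multiplied by the probability rating."""
        return self.score * self.probability


def rank(ratings: list[RiskRating]) -> list[tuple[str, int, int]]:
    """Return (name, score, ranking_value), highest ranking_value first."""
    ordered = sorted(ratings, key=lambda r: r.ranking_value, reverse=True)
    return [(r.name, r.score, r.ranking_value) for r in ordered]


if __name__ == "__main__":
    ratings = [
        RiskRating("terrorist attack", threat=2, vulnerability=6, impact=10, probability=2),
        RiskRating("supplier outage", threat=6, vulnerability=5, impact=4, probability=6),
        RiskRating("data breach", threat=5, vulnerability=8, impact=3, probability=5),
    ]
    for name, score, value in rank(ratings):
        print(f"{name:18} score={score:4} ranking={value:5}")
```

In this hypothetical data all three risks share the same Threat × Vulnerability × Impact score of 120 even though their inputs differ widely, and nothing in the calculation represents observer bias or uncertainty.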

The Need for Risk Parity

Risk Parity is a balancing of resources against a risk. You identify a risk and then balance the resources you allocate to buffer against the risk being realized (that is, occurring). This is done for every risk that you identify, and it is a constant process of allocating resources to buffer each risk based on the expectation of the risk occurring and on its velocity, its impact and your ability to sustain resilience against its realization. You apply this and then constantly reassess to determine which resources need to be shifted to address the risk. This can be a short-term or long-term effort. The main point is that achieving risk parity is a balancing of resources based on an assessment of risk realization.

Risk Parity is not static, because risk is not static. When I say risk is not static, I mean that when you identify a risk and take action to mitigate it, the risk changes in response to your action. The risk may increase or decrease, but it changes because of the action taken. You essentially create a new form of risk that you have to assess with regard to your action to mitigate the original risk. This can become quite complex, as others will also be altering the state of the risk by taking actions to buffer it. The network in which your organization operates (i.e., the “Value Chain”: customers, suppliers, etc.) reacts to the actions taken to address risk, and these reactions result in a non-static risk.
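The allocate-then-reassess cycle described in this section can be sketched in a few lines, assuming risk parity is implemented as a proportional split of a fixed budget across assessed risk values. The article does not prescribe a formula; the proportional rule, the `allocate` and `shifts` helpers, the risk names and all figures are hypothetical.

```python
def allocate(budget: float, assessed_risk: dict[str, float]) -> dict[str, float]:
    """Split a fixed budget across risks in proportion to their assessed values.

    Hypothetical rule for illustration: the article describes risk parity as a
    balancing of resources, not a specific allocation formula.
    """
    total = sum(assessed_risk.values())
    if total <= 0:
        raise ValueError("at least one risk must have a positive assessment")
    return {name: budget * value / total for name, value in assessed_risk.items()}


def shifts(old: dict[str, float], new: dict[str, float]) -> dict[str, float]:
    """Resources to move for each risk (positive = add, negative = withdraw)."""
    return {name: new.get(name, 0.0) - old.get(name, 0.0)
            for name in old.keys() | new.keys()}


if __name__ == "__main__":
    budget = 100_000.0
    # Initial assessment (hypothetical values).
    before = allocate(budget, {"cyber": 600, "supplier": 300, "pandemic": 100})
    # Reassessment after mitigation has changed the risks (hypothetical values).
    after = allocate(budget, {"cyber": 400, "supplier": 400, "pandemic": 200})
    print(sorted(shifts(before, after).items()))
    # prints [('cyber', -20000.0), ('pandemic', 10000.0), ('supplier', 10000.0)]
```

A proportional split keeps the sketch short; any other balancing rule could be swapped into `allocate` while the reassess-and-shift loop stays the same.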

A good example would be the purchase of, say, 100 shares of a stock. You have a risk that the stock will decline in value (downside risk); you might decide to sell a call option to partially offset the downside risk or place a stop-loss order to minimize your loss. In essence, you have changed the risk (non-static). The call option also creates a new risk: the risk that your stock may be called away if its price rises above the strike price. This will limit your profit on the stock (upside risk). In any event, you have altered the risk, and it has become non-static due to your actions and/or the actions of others within and outside your network. This brings us to non-aligned risk, which is risk that is influenced by nonlinear reactions.
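The stock example can be made concrete with a little arithmetic. This is a simplified sketch, not trading guidance: it assumes one call contract covering the 100 shares, evaluates the call only at expiration (ignoring early assignment), assumes a stop-loss order fills exactly at its stop price, and uses hypothetical prices (purchase at 50, strike 55, premium 2 per share, stop at 45).

```python
SHARES = 100  # position size from the example in the text


def stock_pnl(purchase: float, final_price: float) -> float:
    """Profit or loss on the unhedged shares."""
    return SHARES * (final_price - purchase)


def covered_call_pnl(purchase: float, strike: float, premium: float,
                     final_price: float) -> float:
    """Shares plus one short call, evaluated at expiration only.

    Above the strike the shares are called away at the strike, capping the
    gain; the premium received cushions a decline only by its own amount.
    """
    return SHARES * (min(final_price, strike) - purchase + premium)


def stop_loss_pnl(purchase: float, stop: float, path: list[float]) -> float:
    """Shares protected by a stop-loss order, following a price path.

    Simplification: the order fills exactly at the stop price the first time
    the path touches it (no gaps or slippage).
    """
    for price in path:
        if price <= stop:
            return SHARES * (stop - purchase)
    return SHARES * (path[-1] - purchase)


if __name__ == "__main__":
    purchase, strike, premium = 50.0, 55.0, 2.0
    for final in (40.0, 50.0, 60.0, 70.0):
        print(f"final {final:5.1f}: stock {stock_pnl(purchase, final):8.1f}, "
              f"covered call {covered_call_pnl(purchase, strike, premium, final):8.1f}")
    print("stop loss, dip then recovery:",
          stop_loss_pnl(purchase, 45.0, [50.0, 47.0, 44.0, 52.0]))
```

With these hypothetical numbers, the premium trims a fall to 40 from a 1,000 loss to an 800 loss, while a rise to 70 yields 700 instead of 2,000; and a stop-loss triggered by a brief dip to 44 locks in a 500 loss even though the price later recovers to 52. Each hedge swaps one risk for another.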

I think that “relevance” is a very significant word relative to KRIs (key risk indicators). You can have an extensive list of KRIs, but if they are not relevant to the organization and its operations, they do little to enhance risk management efforts. That said, we have to assess non-linearity and opacity with regard to the potential obfuscation of “relevance”.

Conclusion

Traditional approaches to Business Continuity, Disaster Recovery, Crisis Management, Emergency Response and concepts such as Incident Command, the National Incident Management System, etc. are facing “new ground,” so to speak, as traditional approaches may not be as effective in dealing with the risk realities faced today. Uncertainty is the nature of risk; hence, projecting risk in terms of probability of occurrence can provide only limited value for a short period of time. In addition, much of our planning is reactionary, driven by media hype and high-profile events that have a limited lifespan (e.g., the Ebola crisis and the subsequent surge in planning and uncertainty). We also need to get away from purely tactical planning, that is, reacting to the last event. Threat dynamics are changing, resulting in more uncertainty, not less; this requires a planning approach that integrates tactical, operational and strategic planning, combining continuity, emergency, crisis, disaster and contingency planning into an integrated process.

For any organization to succeed in today’s fast-paced, globally interlinked business environment, it must address its ability to identify and assess risk and to reduce uncertainty. When you take Management (leadership & decision-making), Planning, Operations, Logistics, Communications, Finance, Administration, Infrastructure (Internal & External), Reputation, External Relations and other dependency issues into account, there is significant impact on six areas that I consider critical for organizations: Strategy (Goals & Objectives), Concept of Operations, Organizational Structure, Resource Management, Core Competencies and Pragmatic Leadership (at all levels, with a common understanding of terminology).

We live in a world full of consequences. Our decisions need to be made with the most information available, recognizing that all decisions carry flaws due to our inability to know everything: uncertainty. Our focus should be on how our flawed decisions establish a context for flawed Risk, Threat, Hazard, Vulnerability (RTHV) assessments, leading to flawed plans and, in turn, flawed abilities to execute effectively. If we change our thought processes from chasing symptoms and ignoring consequences to recognizing the limitations of decision making under uncertainty, we may find that the decisions we are making have more upside than downside.

(C) Geary W. Sikich, 2016. World rights reserved. Published with permission.

About the Author

Geary W. Sikich is the author of “It Can’t Happen Here: All Hazards Crisis Management Planning” (Tulsa, Oklahoma: PennWell Books, 1993). His second book, “Emergency Management Planning Handbook” (New York: McGraw-Hill, 1995), is available in English and Spanish-language versions. His third book, “Integrated Business Continuity: Maintaining Resilience in Uncertain Times” (PennWell, 2003), is available on www.Amazon.com. His latest book, “Protecting Your Business in a Pandemic” (Greenwood Publishing), is available on www.Amazon.com. Mr. Sikich is the founder and a principal of Logical Management Systems, Corp. (www.logicalmanagement.com), based near Chicago, IL. He has extensive experience in management consulting in a variety of fields. Sikich consults on a regular basis with companies worldwide on business continuity and crisis management issues. He has a Bachelor of Science degree in criminology from Indiana State University and a Master of Education in counseling and guidance from the University of Texas, El Paso.


Thanks for this great article.

First, I really like the fact that you are not blending the idea of measurement error with the idea of uncertainty. Many comparable sources do that. The GUM 1995 specification seems to be a major source of confusion here, since it works with the following definition of uncertainty:

“uncertainty (of measurement): parameter, associated with the result of a measurement, that characterizes the dispersion of the values that could reasonably be attributed to the measurand”

Uncertainty here is declared to be an expressible parameter, so the full toolchain of stochastics can be applied. I am convinced that this is not good enough, and the article seems to point in the same direction. Uncertainty is about doubt about, and trust in, a piece of information. It is a subjective thing. Measurement uncertainty, or experimental uncertainty as many sources also call it, is an objective thing.

From my limited point of view, one root cause of the problem is that most of the risk analysis literature is obsessed with aleatory uncertainty, which concerns phenomena that are random in nature. This is not a surprise: physical faults give you this kind of uncertainty. When you think about the history of risk and reliability analysis, it all started with these kinds of issues. We still rely on the methods developed for them.

The problem is that we now live in a world where aleatoric uncertainties are the smaller problem. We face a networked world of software/hardware combinations, in which complexity and the pace of development heavily increase epistemic uncertainty. Your risk analysis input becomes increasingly doubtful, not because of its underlying nature, but because you can’t keep up. Facts about system parts change all the time, tons of details are hidden, and complexity and interdependencies explode in size.

Good times ahead for research, I would say.

Thanks for the kind words, Peter; much appreciated. And thanks for highlighting the common confusion around uncertainty and measurement errors. I agree: ample content for future research. It may find a good audience when linked to risk-based decision making. Cheers, Fred