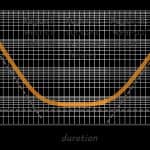

When is the biggest ‘improvement’ in the reliability of a new ‘type’ of product? This is a very broad question with no doubt lots of answers that aren’t wrong. But what we do know is that the more experience we have with building something, the more reliable it gets. And this effect is most marked at the start of the product’s life. [Read more…]

Reliability in Emerging Technology

The only thing that doesn’t change is change itself. We are constantly exposed to new and better products, services that are more efficient, and things that generally make our lives better.

But how long will they work for? … and will they be safe?

And we often get it wrong. Toyota vehicles of the early 2000s had a problem with their new electronic throttle control system that saw them accelerate without warning – reliability was not the priority it needed to be. But autonomous vehicles are perhaps faced with an over-abundance of caution bordering on trepidation, meaning that the 95 per cent of road deaths caused by human error are still happening as the technology ‘drives unused.’ And then there are the new products that you either never hear of or can barely remember because they barely worked long enough for customers to enjoy. Budding entrepreneurs forget that there is a difference between time to market and time to market acceptance.

So what are we to do? The answer involves a healthy dose of historic ‘reliability-principles’ with a blend of tailored approaches that goes (well and truly) beyond a ‘checklist’ or ‘compliance’ approach. So how do we get that mix right? That is the question.

Small Satellites, Emerging Technology and Big Opportunities (part five of seven) – Stop solving the problem you want to solve

InnoCentive CEO Dwayne Spradlin wrote in this article about how many companies, in a rush to develop something new and innovative, try to solve the problem they want to solve rather than the problem the customer wants solved. He quotes Albert Einstein, who once said:

If I were given one hour to save the planet, I would spend 59 minutes defining the problem and one minute resolving it.

Small Satellites, Emerging Technology and Big Opportunities (part four of seven) – Let’s Learn about Reliability

Imagine you are not feeling well and you go to a doctor. When you sit down in the doctor’s office, he doesn’t even look at you. Instead, he has a book opened on the desk and starts reading it aloud. You sit there without being examined, listening to a list of medicines, activities, exercises and diet changes. The doctor then looks up and says that you need to do all of them. Take all the medicines. Change all your activities. Start doing all the exercises. And completely change your diet. [Read more…]

Small Satellites, Emerging Technology and Big Opportunities (part three of seven) – No, we really mean ‘Mission Assurance’

In the late 1970s, Hewlett Packard was a company that valued quality compliance, certification and awards. But the then Chief Executive Officer noticed a problem. He (on a hunch) initiated an analysis of ‘quality related expenses.’ He wanted to quantify the cost of defects and failure. The results were terrifying. [Read more…]

Small Satellites, Emerging Technology and Big Opportunities (part two of seven) – Compliance and the Antithesis of Performance

In 1995, the United States Department of Energy (DoE) funded research into Princeton University’s Plasma Physics Laboratory (PPPL). PPPL was developing plasma fusion techniques, and the research in question focused on quality assurance within the laboratory. It was investigating the utility of a new type of quality assurance: one that was performance-based. [Read more…]

Small Satellites, Emerging Technology and Big Opportunities (part one of seven) – Reliability and Awesome New Things

Two rockets launched from Earth in November 2013. They carried a total of 61 small satellites from 20 different manufacturers. A satellite that is less than 500 kg in mass is considered ‘small.’ But small satellites are unique in many other ways. Old and ‘big’ satellites are massive, multi-billion dollar machines that take years to build and are the ‘only shot’ at achieving a mission. A ‘big satellite’ that stops working is a disaster. If a ‘small satellite’ fails, there can be many others floating around Earth to pick up the slack. [Read more…]

Autonomous Vehicles and Safety – Why Industry has Every Right to Not Wait for Regulatory and Academic Leadership to Arise

What are legislators, regulators and academics doing to help the introduction of Autonomous Vehicles (AVs)? I don’t know either.

One of the sessions of the 2017 Autonomous Vehicle Safety Regulation World Congress, held in Novi, Michigan, was devoted to ethics. The idea is that AVs must be taught what to do when death is unavoidable (hold that thought). That is, if an accident is imminent, does the AV kill the old lady or the three-month-old baby? Does the AV protect the driver or others around it? Many media outlets, journals and blogs emphasize this conundrum. The MIT Technology Review published ‘Why Self-Driving Cars Must Be Programmed to Kill,’ in which it discussed the behaviors that need to be embedded into AVs to control casualties. Some of you may be familiar with MIT’s Moral Machine, an online survey aimed at understanding what the public thinks AVs should do in the event of an accident that involves fatalities.

But this discussion has conveniently hurdled the question – do AVs need to be programmed to kill? Because the answer is absolutely not. There is no compelling argument for anyone to expect manufacturers to design this sort of capability into their vehicles. In fact, it is likely going to make matters worse.

Autonomous Vehicle Regulation – Could Less Actually Be More?

Autonomous Vehicles (AVs) are still futuristic – but plenty of people are thinking about them and what they would mean – particularly as they relate to safety.

And when they do, they invariably think about how vehicles are currently regulated as a starting point. We envisage perhaps more regulation, standards and rules – because AVs are more complex and complicated. But for every regulation, standard and rule, we take responsibility away from the manufacturer.

Why?

Because all the manufacturer needs to do is ensure that their AV meets each regulation, standard and rule for them to not be liable for subsequent accidents (this is a simplistic interpretation to be sure … but satisfactory for the sake of this article).

Is this desirable?

Is this possible? [Read more…]

Autonomous Vehicle Regulation

could less actually be more?

Autonomous Vehicles (AVs) are still futuristic – but plenty of people are thinking about them and what they would mean – particularly as they relate to safety. And when they do, they invariably think about how vehicles are currently regulated as a starting point.

We envisage perhaps more autonomous vehicle regulation, standards and rules – because AVs are more complex and complicated. But for every regulation, standard and rule, we take responsibility away from the manufacturer.

Why? Because all the manufacturer needs to do is ensure that their AV meets each regulation, standard and rule for them to not be liable for subsequent accidents (this is a simplistic interpretation to be sure … but satisfactory for the sake of this article).

Is this desirable? Is this possible?

Red Flags and Autonomous System Safety

and the importance of looking back before looking forward

Have we gone through the introduction of autonomous vehicles before? In other words, have we gone through the introduction of a new, potentially hazardous but wonderfully promising technology?

Of course we have. Many times. And we make many of the same mistakes each time.

When the first automobiles were introduced in the 1800s, mild legislative hysteria ensued. A flurry of ‘red flag’ traffic acts were passed in both the United Kingdom and the United States. Many of these acts required self-propelled locomotives to have at least three people operating them, travel no faster than four miles per hour, and have someone (on foot) carry a red flag around 60 yards in front. [Read more…]
