In the late 1970s, Hewlett Packard was a company that valued quality compliance, certification and awards. But the then Chief Executive Officer noticed a problem. He (on a hunch) initiated an analysis of ‘quality related expenses.’ He wanted to quantify the cost of defects and failure. The results were terrifying.

For the manufacturing process alone, 25 per cent of Hewlett Packard resources were associated with rectifying faults and defects. So Hewlett Packard initiated the ‘10 X’ project. Instead of focusing on complying with standards, a performance objective was created: the reduction of product failure rates by a factor of 10 over a period of 10 years. With a fully committed management team, the results were startling. While they did not quite reach their target of 10 per cent of the original rate, Hewlett Packard failure rates were reduced to 12.6 per cent. Not by 12.6 per cent. To 12.6 per cent. A reduction of 87.4 per cent. They saved $808 million in warranty costs alone. Stockholding savings were in the order of billions of dollars.

American conglomerate Du Pont effectively did the same thing in 1986. They too found that despite their industry leading quality compliance, certification and awards, 25 per cent of their manufacturing resources were tied up with rectifying defects and faults. They instigated a philosophically similar program to Hewlett Packard and realized similarly startling results. They characterized their transition as moving toward a learning organization.

These are but two examples of what happens when organizations replace ‘complying’ with ‘thinking.’ Two things are brutally clear:

Without a focus on performance, you are not assuring reliability, safety, availability or a mission.

Focusing exclusively on compliance provides little beyond a (misleading) peace of mind.

This is the third in a series of articles examining emerging technologies and reliability – inspired by my recent experiences in the small satellite industry. Small satellites are an emerging technology and are doing tremendous things in an industry that is not ‘used’ to them. But there are problems. Problems like those faced by any number of new products or systems that have changed the status quo.

The previous two articles set the scene for this series and talked about compliance. That is, saying something is good, safe, or reliable if a checklist of activities has been completed during design and manufacture. But as Hewlett Packard and Du Pont showed, compliance does not work.

This article talks about what needs to replace compliance. This is where the term mission assurance becomes important. There is no such thing as mission assurance in the literal sense. There is nothing we can do to absolutely guarantee that a hardware and software system will never fail – even during a particular timeframe. It gets more challenging when we try to understand failure probability. Common reliability demonstration test approaches (such as those outlined in MIL-HDBK-781A) never entirely remove decision risks. You can only ever demonstrate that something has ‘met’ a reliability requirement when you have first specified your tolerance for the probability that you are wrong (confusing, right?). And this is not to mention the many other arbitrary assumptions, such as a constant hazard rate, that make the outcomes of a reliability demonstration-based assurance activity decidedly uncertain.
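To make the idea of decision risk concrete, here is a minimal sketch of a generic zero-failure demonstration test. It is not a plan taken from MIL-HDBK-781A. It assumes a constant hazard rate (the very assumption questioned above), and the function names and the numbers in the usage note are hypothetical.

```python
import math


def zero_failure_test_time(required_mtbf: float, consumer_risk: float) -> float:
    """Failure-free test time needed to 'demonstrate' required_mtbf.

    Assumes a constant hazard rate, so the chance of seeing zero failures
    in time t is exp(-t / mtbf). consumer_risk is the probability we accept
    of passing a product whose true MTBF only just equals the requirement.
    """
    if not 0.0 < consumer_risk < 1.0:
        raise ValueError("consumer_risk must be strictly between 0 and 1")
    return -required_mtbf * math.log(consumer_risk)


def pass_probability(true_mtbf: float, test_time: float) -> float:
    """Probability of zero failures in test_time for a given true MTBF."""
    return math.exp(-test_time / true_mtbf)


# Hypothetical requirement: 1,000 hours MTBF with a 10 per cent consumer risk.
test_time = zero_failure_test_time(required_mtbf=1000.0, consumer_risk=0.10)
print(f"Required failure-free test time: {test_time:.0f} hours")
print(f"Pass chance if true MTBF is 2,000 hours: {pass_probability(2000.0, test_time):.2f}")
```

Under these assumptions the test needs about 2,300 failure-free hours, and a product that is twice as good as the requirement still passes only about a third of the time. The requirement is never 'met' in any absolute sense. It is met relative to the risks you chose before testing.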

But every time we talk about something being ‘good’ by talking about what the design team has done and not what the system can do, we invariably see problems. So we need to go back to articulating exactly what the thing we are designing must do. Not what the people making it must do.

Defining the System: Defining the Mission

Most textbooks or guidebooks on mission assurance focus on one thing: the reliability, availability and maintainability (RAM) performance of a single system. For example, most small satellite mission assurance frameworks equate the system with a single satellite. The same goes for cars.

But this is increasingly short sighted. For satellites, the system boundaries now need to include the ground segment, the launch segment and any other satellites as part of the overall system. For autonomous vehicles, the system needs to include the networks, software and safety updating process that takes place in an office building thousands of miles away from where the car is being driven.

But returning to satellites, in no document that claims to deal with satellite mission assurance is there any text dedicated to a constellation. For those of you new to the small satellite industry, a constellation is a group of satellites that are working together toward a single mission, function or service (not a group of stars). Not talking about constellations for small satellite mission assurance is a problem.

Planet.com is a company that operates several small satellite constellations. Its mission is an example of what ‘true missions’ look like:

the ability to image all of Earth’s landmass every day.

This is not the mission for a single satellite. Planet.com needed to launch and operate 149 satellites to do this. These 149 satellites provide redundancy. Small satellite mission design therefore needs to consider the constellation size. Individual satellite RAM characteristics are no longer a sole measure of mission assurance because the system includes the constellation. Or to be more correct, the system is the constellation and all ground-based support infrastructure. Focusing on RAM characteristics means we lose one of the primary benefits of small satellite technology.

Traditional large satellites could more realistically be treated as single systems. And traditional mission assurance revolves around the idea of single equipment equaling single systems. But what if it is more cost-effective to launch many ‘unreliable’ small satellites in lieu of fewer ‘reliable’ satellites? The effect this has on the commercial calculus is a profound opportunity. One that disappears when we impose a compliance-based mission assurance framework.
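The trade between many ‘unreliable’ satellites and fewer ‘reliable’ ones can be sketched with a simple k-out-of-n calculation. This is a minimal illustration only: it assumes satellites fail independently with identical reliability, and the constellation sizes and reliabilities below are hypothetical, not Planet.com data.

```python
from math import comb


def constellation_success(n_launched: int, k_needed: int, p_sat: float) -> float:
    """Probability that at least k_needed of n_launched satellites work.

    Assumes independent, identical satellites (a simplification), so the
    number of working satellites follows a binomial distribution.
    """
    return sum(
        comb(n_launched, i) * p_sat**i * (1.0 - p_sat) ** (n_launched - i)
        for i in range(k_needed, n_launched + 1)
    )


# Hypothetical mission that needs 10 working satellites.
few_reliable = constellation_success(n_launched=10, k_needed=10, p_sat=0.95)
many_unreliable = constellation_success(n_launched=15, k_needed=10, p_sat=0.80)

print(f"10 satellites at 0.95 each: {few_reliable:.2f}")
print(f"15 satellites at 0.80 each: {many_unreliable:.2f}")
```

With these made-up numbers, ten ‘reliable’ satellites give roughly a 0.60 chance of mission success, while fifteen ‘unreliable’ ones give roughly 0.94. Whether the second option is cheaper depends on unit and launch costs, which is exactly the commercial discussion a compliance checklist never has.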

This does not mean we aren’t interested in individual small satellite reliability. But much like the way Du Pont characterized its transformation to a learning organization, small satellite mission assurance needs to focus on learning about satellite reliability as an enabling activity for a much broader discussion on mission assurance. Much can be done to improve small satellite reliability. It is the why, what and the how much that drives decision making.

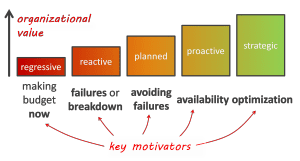

It is perhaps useful to introduce Winston Ledet’s model of Organizational Operation Domains which is illustrated below. Ledet worked at Du Pont during its transformation into a ‘learning organization’. He has since become a leader in the area and has published multiple papers and books on the topic. His model of Organizational Operation Domains is simple to look at but complicated enough for its own series of articles. For now, the illustration below will suffice.

Ledet Model of Organizational Operational Domains

If an organization’s available RAM assurance resources are entirely consumed by potentially irrelevant compliance frameworks, that organization can only be regressive or reactive.

What? What if the standard or checklist you follow includes preventive measures for failure mechanisms? Well that is great, if those are the only failure mechanisms that matter. But they aren’t. An organization that moves into a planned operational domain is starting to understand the failures it is experiencing. And remember, there is no product, system or device that has successfully prevented its way to a state of zero failure probability. Compliance (by definition) maintains the status quo.

With no scientific focus on the specific failure mechanisms of the new thing they are producing, there is no scope for anything to be planned, proactive or strategic.

Thinking guided by standards – not standard thinking

By now it should be clear that the concept of mission assurance needs to shift from compliance to performance. This means focusing on what the system does, and not what the design team does. In short, we need to say something like:

Mission assurance is an organizational cultural framework that uses and seeks collective knowledge to produce a system that is optimally likely to achieve a performance-based mission objective (or set of objectives).

This is a profound change. This implies that an engineer can choose not to implement a standard mission assurance task. They may have used their expertise to develop a new or better activity that is more suited to the product being developed. Or have determined that the standard in question is not applicable for the system being developed. And for all emerging technologies, this is pretty much the only way.

But you may immediately wonder how can you as a prospective customer ensure that this engineer made the right decision? How do you know that the engineer simply did not make the lazy or easy decision under the unchallengeable guise of engineering judgment? This is the conundrum talked about in the previous article (Observation #3: Compliance is easy, thinking is hard.) Compliance is easy to see. Reliability and availability performance (the outcome of these engineering decisions) is not.

We touch on this challenge later in this article. But first, a small example.

Mini-Case Study: Space Debris

Large numbers of satellites raise the specter of space debris, known technically as resident space objects (RSOs), permanently hampering satellite operations. While many new satellites are being built in accordance with voluntary policies on post mission disposal, not all are. What this means is that not all satellites are being designed in a way that they will dispose of themselves (by falling into the Earth’s atmosphere) at the end of their mission. We now have a lot of space junk orbiting Earth in relatively uncontrolled ways.

We need to get a better understanding of what this means. And while there are proposals to launch spacecraft specifically to capture RSOs, do we have to?

It may be more cost effective (or easier than trying to get all prospective satellite manufacturers to agree to voluntary standards) to simply accept space debris. That is, launch enough satellites to accommodate RSO impact failure. This means that we also need to know the probability of RSO impact.

A recent analysis suggested that the future ‘worst case RSO scenario’ would involve around one catastrophic low earth orbit collision every two years. This is for all small satellites launched, assuming we will eventually see around 500 launches per year. If we further assume an average mission life of 1 year, we are looking at around a 1 in 1000 chance that a mission will fail due to a catastrophic low earth orbit collision.

A 1 in 1000 chance of failure is very low. In the first article of this series we talk about how there is currently around a 40 per cent chance of small satellite failure (this is discussed in greater detail in the next article). This is a 2 in 5 chance of the satellite failing without impacting an RSO. So if I had a dollar to invest in making small satellites more reliable, it certainly would not go to removing RSOs in orbit. You would do more good by donating that money to charity.
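The arithmetic behind these two figures can be written out as a short sketch. It uses only the numbers quoted above, plus two simplifying assumptions of mine: each catastrophic collision ends exactly one mission, and collisions are spread evenly across the satellites in orbit. The function and variable names are illustrative.

```python
def collision_failure_probability(
    collisions_per_year: float,
    launches_per_year: float,
    mission_life_years: float,
) -> float:
    """Approximate chance that one mission ends in a catastrophic collision.

    Assumes each collision ends exactly one mission and that collisions
    are spread evenly over the satellites in orbit (both simplifications).
    """
    satellites_in_orbit = launches_per_year * mission_life_years
    annual_rate_per_satellite = collisions_per_year / satellites_in_orbit
    return annual_rate_per_satellite * mission_life_years


# Figures quoted in the article.
p_collision = collision_failure_probability(
    collisions_per_year=0.5,   # one collision every two years
    launches_per_year=500.0,
    mission_life_years=1.0,
)
p_other_failure = 0.40         # current small satellite failure chance

print(f"Chance of collision failure: 1 in {1.0 / p_collision:.0f}")
print(f"Other failures are about {p_other_failure / p_collision:.0f} times more likely")
```

On these numbers, failure from every other cause is about 400 times more likely than failure from an RSO collision, which is why the reliability dollar is better spent elsewhere.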

But some people have assumed something must be done about RSOs without demonstrating why. Any decision to invest in removing RSOs must include a risk-based analysis of the data. Is a 1 in 1000 chance of a catastrophic low earth orbit collision low enough for us to not do anything about it? Probably. And this should be up to the manufacturer (in conjunction with the customer) to discuss.

A compliance-based framework ignores this discussion. Instead we focus on consensus in opinion for all scenarios. This naturally moves to worst case scenario based on plausibility and not probability. This also means mission assurance will involve (potentially costly) design choices to all possible scenarios and not the few probable ones.

If a small satellite is impacted by an RSO, will this cause a small and neat hole? If so, can we manufacture things like solar arrays to accommodate this sort of damage? Does this get put into a standard or mission assurance guide? Do customers then expect all satellites to have these mitigation functions, which may drive up cost, schedule and unreliability in other systems? Are we still talking about a 1 in 1000 chance of this happening?

This is where performance-based mission assurance always beats compliance. If the likelihood of RSO collision drives design and operational decisions, then we are truly managing risk. We know what an RSO impact means, how much it will cost to mitigate, and what is best for the mission. Should we only launch ‘robust’ small satellites that can withstand RSO damage that cost (for example) 20 per cent more than less robust satellites when there is a 1 in 1000 chance of RSO damage occurring? No.
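One way to see why the answer is ‘no’ is a simple expected cost comparison. The sketch below uses the 20 per cent premium and the 1 in 1000 probability from the example above. Everything else is an assumption for illustration: costs are normalized so a basic satellite costs 1.0, an RSO impact is treated as the loss of one basic satellite, and the robust design is assumed to prevent that loss completely.

```python
def expected_cost(unit_cost: float, loss_probability: float, loss_cost: float) -> float:
    """Unit cost plus the probability-weighted cost of losing the satellite."""
    return unit_cost + loss_probability * loss_cost


def break_even_probability(cost_premium: float, loss_cost: float) -> float:
    """Loss probability at which paying the premium starts to pay off."""
    return cost_premium / loss_cost


BASIC_COST = 1.0          # normalized cost of a less robust satellite
ROBUST_PREMIUM = 0.20     # robust satellite costs 20 per cent more
P_RSO_DAMAGE = 1 / 1000   # chance of RSO damage during the mission

# Assumption: the robust design removes the RSO loss entirely.
basic = expected_cost(BASIC_COST, P_RSO_DAMAGE, loss_cost=BASIC_COST)
robust = expected_cost(BASIC_COST * (1 + ROBUST_PREMIUM), 0.0, loss_cost=BASIC_COST)
threshold = break_even_probability(ROBUST_PREMIUM * BASIC_COST, loss_cost=BASIC_COST)

print(f"Expected cost, basic satellite:  {basic:.3f}")
print(f"Expected cost, robust satellite: {robust:.3f}")
print(f"Break-even RSO damage probability: {threshold:.2f}")
```

Under these assumptions the premium only pays for itself if the chance of RSO damage is around 1 in 5, some 200 times higher than the worst case estimate. A real analysis would also price lost revenue and replacement launch costs, but the decision is driven by numbers rather than by a mandated design feature.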

This is the sort of performance-based decision making we should be encouraging – not destroying by mandating compliance.

There is a need for performance-based mission assurance everywhere … not just in emerging technology.

In 2009, the Montara wellhead located in the Timor Sea (off the north-west coast of Australia) suffered a blowout where the cement that secured the well into the ocean floor failed. The wellhead was extracting oil from the Timor Oil Field. The United States oil and gas industry organizations quickly went to some lengths to explain why such an event could not occur in and around the continental United States. But the Macondo well blowout (Deepwater Horizon disaster) occurred the following year in a very similar way in the Gulf of Mexico. Why?

The United States National Academies of Science investigated what went wrong. Their findings caused them to publish Beyond Compliance: Strengthening the Safety Culture of the Offshore Oil and Gas Industry. This document outlined many of the challenges that the articles in this series have addressed. Both blowout events were very similar, with the root cause buried within (poor) safety cultures that prioritized short term financial gain. And both blowouts occurred on ‘safe’ systems that were deemed compliant by the regulators at the time.

The National Academies made several recommendations that move away from exclusive compliance assurance and regulatory frameworks. The extent to which these are successful is yet to be determined, but their observations mirror virtually everything we have discussed to date in these articles.

The National Aeronautics and Space Administration’s (NASA’s) two space shuttle disasters have become classic case studies for engineering-managerial decision making. The Rogers Commission (which investigated the Challenger Space Shuttle disaster in 1986) found a deeply troubling safety culture in NASA. The failure mechanism on Challenger’s solid rocket boosters had been known for more than a decade. All engineers voted to delay launch at a meeting with managers as the unseasonably low temperature exacerbated this known problem. The management team overruled them, concerned about the costs it would incur from the Air Force customer whose satellite would be launched by Challenger. The Rogers Commission instituted substantial change to prevent such a disaster. But the changes didn’t work.

The Columbia Space Shuttle failed on re-entry in 2003. The way it failed was different to Challenger. But it was culturally identical. The review into the Columbia disaster went well beyond the physical aspects of failure. It again found a dominant space flight culture that prioritized launch over reliability. This was influenced by funding decisions made in the early 1970s, with NASA being provided substantially less funding than its grandiose vision of space exploration demanded. So the choice was made to invest money into space flight – not reliability. The Columbia Accident Investigation Board (CAIB) concluded that no compliance-based safety assurance framework could overcome this culture. And so the space shuttle program was cancelled. Compliance-based assurance frameworks cannot guarantee ‘success.’ But organizational culture can.

The examples are for all intents and purposes endless. The root cause of the Fukushima disaster was what we call regulatory capture. Employees of the Japanese nuclear regulatory body hoped to be eventually employed by an energy company for many reasons including perceived hierarchy and higher salary. The progression from regulator to operator was a pseudo-formal career stream (and even had its own name). So regulatory body employees placated and pandered to the energy companies that they hoped would one day employ them. This saw the level of compliance drop dramatically, to the extent that the Tokyo Electric Power Company (TEPCO) ignored its own analysis regarding the tsunami risk that caused the disaster.

In 2015, the United States Federal Motor Carrier Safety Administration (FMCSA) launched an initiative to investigate how vehicle manufacturer practices and behaviors could be used to assess vehicle safety – no doubt partly in response to the Toyota sudden unintended acceleration problem that claimed many lives. These informed the Fixing America’s Surface Transportation (FAST) Act.

So we are seeing a (slow) realization that compliance of itself will not work.

The Toyota sudden unintended acceleration problem is itself an interesting case study. The Toyota Way has long been the inspiration for quality control and reliability performance in industry. Toyota has pioneered manufacturing approaches that have resulted in industry leading reliability (a prize it competes with other Japanese manufacturers for). So what went wrong?

Culture. In 1998, Toyota decided to strive to be the world’s largest automobile manufacturer. To do this, it had to abandon its own principles. Toyota was famous for mutually beneficial relationships with suppliers. It was famous for permitting anyone on its production line to stop production if they saw a problem. It was famous for many other things that simply had to be ignored to become the world’s biggest automobile manufacturer. Which it ultimately achieved. But it also created the conditions for the problems in its vehicles that saw them accelerate uncontrollably.

If nothing else, those focusing on emerging technology mission assurance can take comfort from other industries that are themselves struggling to balance compliance with performance-based assurance. But this goes beyond comfort – it presents an opportunity.

What Next?

We need a better understanding of the reliability of a product that incorporates emerging technology. Why? Because we are championing a risk-based performance framework to assure a mission. And the main risk driver is the risk of failure – or reliability.

It is important to know what drives failure in your system. Even a rudimentary analysis can work out if your main reliability driver is manufacturing quality control versus component degradation. By knowing if something is one or the other, we have a much better idea of what we can do to improve reliability. Importantly, we know what is unlikely to improve reliability.

The next article examines small satellite reliability, and how the data shows that virtually every satellite compliance-based approach to mission assurance is wrong. They focus on the wrong things. And the data is publicly available and relatively well understood.

So why do we keep doing this? Stay tuned.


Chris, the CAIB concurred with my safety requirements and controls implemented on the NSTS program lowering the probability of an incident from debris on the program. The culture changes implemented by program leadership were also corrected. Before returning to normal position, I was asked about how long the Shuttles should fly. It was pointed out to the leadership that the Shuttle had 2 million parts versus the average satellite of 100,000 to 200,000 parts. And that obsolescence in parts to be able to fly the Shuttle as “pristine” would become more and more difficult. The vehicle was designed in the 70’s. I recommended not pushing it much further than 2010. They agreed with that plan. Only when the last flight was delayed did it creep into 2011. There you have it.